Can you run exams on OLab?

Yes, you can. OLab has a strong history of providing many forms of assessment.

OLab3 has been used in high-stakes summative exams, as well as in more regular formative feedback.

Why not SurveyMonkey?

For simple surveys and quizzes, there are many alternatives. For testing simple fact recall, lots of tools will do. (Although with the current developments in AI tools, we should all be moving past the testing of fact recall!)

While OLab can be used for surveys and questionnaires, if that is all you are doing, you might be happier using your usual survey tool. We are not trying to be everything to everyone.

There are lots of things that OLab can do that others cannot, but the biggest difference is the basic structure. OLab was designed from the ground up to make it easier to develop complex branching scenarios that assess decision-making and reasoning processes.

Yes, some survey software, and even PowerPoint, can do simple branching. But they quickly become difficult to use for complex branching, tying you in knots.

What does OLab have to offer?

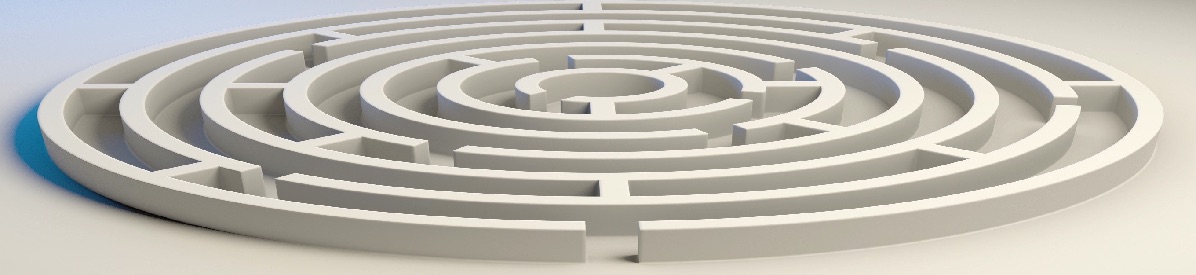

Branching scenarios have been central to OLab’s design model from the outset in 2003. Born as OpenLabyrinth, the software has always been able to support complex, branching design patterns. Initially thought of as cute and fun, this has become central to the analysis of decision-making.

Consequence-based learning: good learning designs have moved beyond testing fact recall (an increasingly irrelevant exercise in the context of today’s ubiquitous information-providing devices). We must move away from best-of-five answers, with one perfect response buried in a bunch of distractors. We must present reasonable and realistic decision options, where some are more reasonable than others, and then a set of consequences that arise from those decisions. OLab easily supports such learning designs.

We have written about the Directed Acyclic Graph (DAG) model and its power in representing complex decision pathways. It is notable that DAG-based software is now finding application in a number of areas, and that DAG-based analytics open up more intricate ways to explore such decision-making.
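To make the DAG idea concrete, here is a minimal sketch of a branching scenario as a graph, with every pathway from entry to exit enumerated. The node names and the `all_pathways` helper are hypothetical, chosen only for illustration; this is not how OLab stores its maps internally.

```python
from typing import Dict, List

# Hypothetical scenario map: each node lists the nodes one decision away.
SCENARIO: Dict[str, List[str]] = {
    "triage": ["order_ct", "give_fluids"],
    "order_ct": ["surgery", "observe"],
    "give_fluids": ["observe"],
    "observe": ["discharge"],
    "surgery": ["discharge"],
    "discharge": [],
}


def all_pathways(graph: Dict[str, List[str]], start: str, end: str) -> List[List[str]]:
    """Return every path from start to end by depth-first search.

    The recursion terminates because the graph is assumed to be acyclic.
    """
    if start == end:
        return [[end]]
    paths = []
    for nxt in graph[start]:
        for tail in all_pathways(graph, nxt, end):
            paths.append([start] + tail)
    return paths


pathways = all_pathways(SCENARIO, "triage", "discharge")
for path in pathways:
    print(" -> ".join(path))
```

Even this tiny map yields three distinct routes to the same exit, which is the kind of structure that becomes hard to manage in a survey tool as the map grows.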

Activity metrics: navigating the complex pathways of a well-designed OLab DAG-based scenario creates a steady stream of detailed metrics that are open to a wide range of analyses. OLab captures:

- every click to the millisecond,

- every node visited no matter how briefly,

- every step retraced,

- every question response (even within-page selections and de-selections),

- how long each took to consider.

OLab can use a variety of selected-response and constructed-response options, along with counters, to generate scores across a range of parameters, e.g. costs of investigations, time waiting for a result, likelihood of effect. All of these internal metrics are captured to a SQL database, which opens up more detailed analytic possibilities using common, standard tools.
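As a rough illustration of the kind of analysis that SQL storage makes possible, the sketch below computes how long a learner spent on each node from a log of timestamped visits. The `node_visits` table, its columns, and the sample rows are assumptions made up for this example; they are not OLab's actual schema.

```python
import sqlite3

# Hypothetical table of node visits, with entry times in milliseconds.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE node_visits (session_id TEXT, node TEXT, entered_ms INTEGER)"
)
conn.executemany(
    "INSERT INTO node_visits VALUES (?, ?, ?)",
    [
        ("s1", "triage", 0),
        ("s1", "order_ct", 4200),
        ("s1", "triage", 9100),  # a retraced step
        ("s1", "give_fluids", 12500),
        ("s1", "observe", 20000),
    ],
)


def dwell_times(conn: sqlite3.Connection, session_id: str) -> dict:
    """Total milliseconds spent on each node, summed over repeat visits.

    The final node has no following visit, so it gets no dwell time here.
    """
    rows = conn.execute(
        "SELECT node, entered_ms FROM node_visits "
        "WHERE session_id = ? ORDER BY entered_ms",
        (session_id,),
    ).fetchall()
    totals: dict = {}
    for (node, start), (_, end) in zip(rows, rows[1:]):
        totals[node] = totals.get(node, 0) + (end - start)
    return totals


times = dwell_times(conn, "s1")
print(times)
```

Because the retraced visit to `triage` is logged as its own row, the time spent reconsidering is counted rather than lost.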

Feedback to users can be provided on-the-fly or at the end of the map, using progress counters, running scores, etc. In some maps, we can provide a detailed pathway analysis. This is sometimes more intuitive than tables of scores and timesheets.

The metrics can also be captured to a Learning Records Store (LRS) using xAPI statements. This allows for federation of metrics storage and analysis, as well as simultaneous capture from multiple tools and platforms such as the LMS, edge devices, mannequins, and other simulation software.
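For readers unfamiliar with xAPI, a statement is a small JSON document built around an actor, a verb, and an object. The sketch below builds one for a node visit. The learner details, the activity URL, and the `node_visit_statement` helper are hypothetical, and the statements OLab actually emits may be shaped differently; the verb ID is one of the commonly used ADL verbs.

```python
import json
from datetime import datetime, timezone


def node_visit_statement(email, name, node_url, node_title, when):
    """Build a minimal xAPI statement: actor, verb, object, timestamp."""
    return {
        "actor": {
            "objectType": "Agent",
            "name": name,
            "mbox": f"mailto:{email}",
        },
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/experienced",
            "display": {"en-US": "experienced"},
        },
        "object": {
            "objectType": "Activity",
            "id": node_url,
            "definition": {"name": {"en-US": node_title}},
        },
        "timestamp": when.isoformat(),
    }


statement = node_visit_statement(
    "learner@example.org",
    "Example Learner",
    "https://olab.example.org/map/12/node/345",  # hypothetical activity ID
    "Order CT scan",
    datetime(2024, 1, 15, 9, 30, 0, tzinfo=timezone.utc),
)
print(json.dumps(statement, indent=2))
```

Because every tool that speaks xAPI produces statements of this same shape, records from the LMS, a mannequin, and a scenario map can sit side by side in one LRS.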

Each OLab4 scenario map can have multiple entry and exit points to the DAG, allowing case authors maximum flexibility in their learning designs. This avoids the creation of simple, boring page-turners, which are all too common in the design of virtual patients, such as those built on the HEIDR model (history, exam, investigation, diagnosis, Rx).

Role-based Node Access: this is a new and unique feature of OLab4. Depending on the role being played in the map (student, teacher, nurse, social worker, paramedic), certain nodes and pathways can be hidden as off-limits e.g. teacher tips, role-specific activities. In OLab3, this was possible by using complex rules and variables. Whole sections of a map can now be turned on or off easily.
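The idea can be sketched in a few lines: each node carries the set of roles allowed to see it, and the map shown to a player is filtered by the role they are playing. The `Node` class, the `visible_nodes` helper, and the convention that an empty role set means "visible to everyone" are all assumptions for this illustration, not OLab4's actual data model.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Node:
    title: str
    # Assumption for this sketch: an empty set means visible to every role.
    allowed_roles: FrozenSet[str] = frozenset()


def visible_nodes(nodes: List[Node], role: str) -> List[Node]:
    """Keep only the nodes that the given role is allowed to see."""
    return [n for n in nodes if not n.allowed_roles or role in n.allowed_roles]


scenario_nodes = [
    Node("Patient history"),
    Node("Teacher tips", frozenset({"teacher"})),
    Node("Medication round", frozenset({"nurse", "teacher"})),
]

student_view = [n.title for n in visible_nodes(scenario_nodes, "student")]
nurse_view = [n.title for n in visible_nodes(scenario_nodes, "nurse")]
print(student_view)
print(nurse_view)
```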

In certain circumstances, the navigation path can be tightly locked down (including the Back button and its variants). And if the user does figure out a workaround to go where they shouldn’t, every misstep is still traced in the activity metrics. Such audits have withstood the appeals process.

Conditional pathways: another way of controlling where the user can go within the map. This allows for such things as adaptive testing and stratified sampling (yes, we are all tired of endless surveys). There were several ways of doing this in OLab3. This functional area is now being streamlined in OLab4.

Real-time Chat: scenario authors can now integrate TTalk text-based chat into their scenarios. This has been feasible in OLab for about ten years, but we now have new learning designs and functions which make TTalk so much more capable.

Scoped Objects, such as Questions, Counters, Constants, and Files, reduce the time spent in scenario writing. Access is controlled so that others do not ruin your carefully crafted Objects.

Server-level counters allow for scenario and learning designs where maps and groups of learners can interact with each other in team-based learning. The activities and decisions of one player or team can affect the case portrayal for another team, either in real-time or asynchronously. Teams can be made to compete or collaborate over scarce resources, such as units of plasma in a mass casualty scenario.

Standard stuff for exams

Yes, OLab can do the boring standard stuff as well. You can connect it to your LMS or whatever user-management system you prefer. You can embed OLab into existing courses, with single sign-on.

Yes, OLab uses a template system. This is really quite flexible. You can create a template of a whole exam, or just of certain segments, which you can drop into other templates or exams.

Yes, OLab uses themes. You can alter the appearance completely, even emulating other exam systems for generating practice cases. You can have a different theme for each map. You can even change themes from node to node.

Yes, OLab can support exam-specific, context-sensitive help. We use GitBook, with its excellent version control, to support outside help materials.

Yes, OLab can support H5P widgets, which are very popular with some scenario authors.

Yes, OLab can support a wide range of question types:

- single-line free text

- multi-line free text

- single choice (radio buttons)

- multiple choice (check boxes)

- drag & drop

- sliders

In OLab3, we supported Script Concordance Testing, Key Feature Questions, and Situational Judgement Testing. These can be reintroduced if requested.

If you have questions, please fill out a survey… ah, no. Give us a call!